Enhancing Drone.io with System Containers

September 24, 2019

Drone is a modern continuous-integration (CI) system built with a containers-first architecture. As such, Drone’s active components run within Docker containers, and the same applies to most of the CI pipelines that it executes.

After playing around with various CI/CD solutions, we have learned to appreciate the simplicity of Drone’s ecosystem, and the flexibility that it offers by enabling self-hosted / customized deployments.

Nevertheless, we have run into some challenges when trying to speed up our own CI pipeline execution while keeping our build infrastructure secure, and this seems to be a common pattern across the organizations we have interacted with.

After doing some research, we found that the problem comes down to the approach Drone uses to interact with the Docker daemon (a.k.a. dockerd) in charge of executing CI pipeline tasks.

Our objective here is to spell out the pros & cons of the existing approaches, and to propose a more efficient and secure method based on Nestybox’s system containers.

Contents

- Drone & Dockerd interaction

- Proposed Solution

- Proposed Solution Setup

- Proposed Solution Test

- Check our Free Trial!

Drone & Dockerd interaction

In Drone’s multi-agent setups, the drone-server hands out pipeline tasks to its connected drone-agents. In agentless scenarios, the drone-server itself takes care of executing the required pipeline steps. In either case, there is always some level of interaction between Drone binaries and a Docker daemon to spawn slave containers that can execute CI pipeline tasks.

There are a couple of well-known approaches being utilized for this interaction:

- DooD (Docker-out-of-Docker): Drone makes use of the dockerd process that sits in the host system, by virtue of a bind-mount of its IPC socket.

- DinD (Docker-in-Docker): Drone interacts with the dockerd process present in the slave container itself.
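To make the distinction concrete, here is a minimal docker-compose sketch of the two approaches. It is our own illustration rather than part of any Drone setup. It assumes the stock `docker` and `docker:dind` images from Docker Hub, and the service names `dood-client` and `dind-daemon` are hypothetical.

```yaml
version: '2.3'

services:
  # DooD: the container carries only the Docker CLI and talks to the
  # host's dockerd through a bind-mount of the host's Docker socket.
  dood-client:
    image: docker
    command: docker ps
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock

  # DinD: the container runs its own dockerd, which (under the default
  # runc runtime) only starts if the container is privileged.
  dind-daemon:
    image: docker:dind
    privileged: true
```

The DooD variant hands the host's socket to the container, while the DinD variant needs the privileged flag. These are the two trade-offs examined in the rest of this section.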

We have fully covered the differences between DinD and DooD solutions in a previous article. Thus, our goal here will be to analyze the pros & cons of these approaches as they are utilized by Drone, and how they can impact Drone’s overall performance.

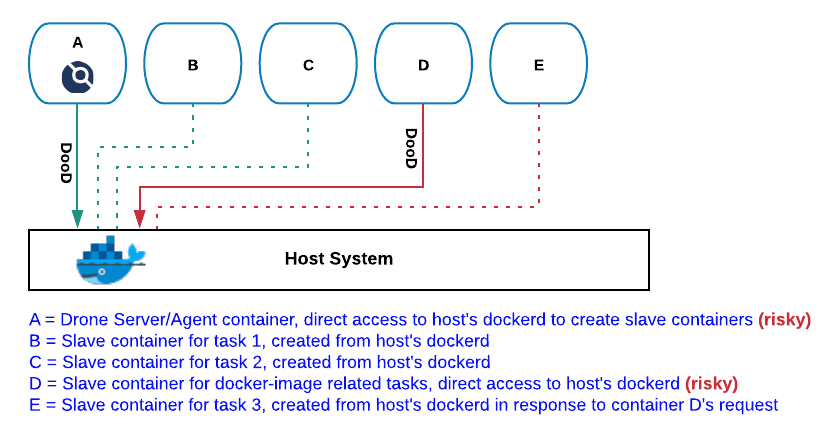

Drone’s DooD Configuration

In this configuration, all the Drone components that require Docker services refer to the host’s dockerd process. This applies to the drone-server and drone-agent containers, as well as to slave containers that require dockerd interaction to complete their tasks (e.g. “docker build”, “docker push”, etc).

For example, in the image below, the drone-server (or drone-agent) in container A interacts with the host Docker daemon to launch slave containers B, C and D. Container D’s goal is to build a docker image, and for this to happen D makes use of the host Docker daemon to launch container E.

This Drone configuration has one important advantage over the DooD + DinD one described below: image-caching. By making use of a centralized and persistent Docker daemon, we can drastically reduce the build time required to execute Drone’s docker-pipelines. Previously imported image layers are kept in Docker’s caching subsystem, which dramatically speeds up subsequent build tasks.

Unfortunately, this comes at a high cost, as we are exposing the host’s Docker daemon to Drone’s build environment: any pipeline step that can reach that socket can start arbitrary containers on the host, which is effectively root access. This is a non-starter for publicly hosted Drone instances (e.g. drone.io), but it also poses a problem for private deployments with security requirements.
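In compose terms, the defining element of this configuration is the host-socket bind-mount on the agent. The fragment below is a sketch, not Nestybox's recipe. It assumes Drone 1.x's stock `drone/agent:1` image and its `DRONE_RPC_SERVER` / `DRONE_RPC_SECRET` settings, and the server URL and secret are placeholders.

```yaml
version: '2.3'

services:
  drone-agent:
    image: drone/agent:1                            # assumption: stock Drone 1.x agent image
    restart: always
    environment:
      - DRONE_RPC_SERVER=http://my-drone.server.com # placeholder server URL
      - DRONE_RPC_SECRET=my-secret                  # placeholder shared secret
    volumes:
      # DooD: hands the host's dockerd to the agent, and to any pipeline
      # step that mounts the same socket.
      - /var/run/docker.sock:/var/run/docker.sock
```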

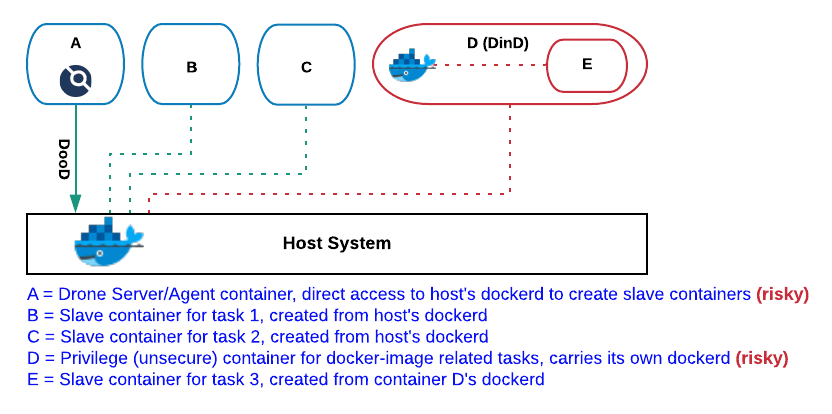

Drone’s DooD + DinD Configuration

As in the DooD configuration, here Drone interacts with the host’s dockerd to spawn slave containers. However, for tasks that require docker-image handling, Drone relies on popular plugins such as drone-docker, which incorporates a dockerd in its container image.

This approach attempts to address the security concerns of the DooD configuration, but it does so at the expense of the image-caching benefit mentioned above. This is a consequence of the lifespan of the dockerd instance running in the inner container (container D in the image below) matching that of the task being executed (i.e. the creation of container E as part of a “docker build” instruction). As a result, we cannot reuse previously built layers, nor can we push the image being produced into any local / centralized cache, so subsequent builds will never be able to leverage image-caching capabilities.

Unfortunately, without image-caching many docker-based pipelines are not tenable, as a large share of image-build time is usually spent fetching the required image-layer dependencies.

Last but not least, notice that for this approach to work, the DinD container must be launched with the privileged flag, which, as described here, poses a serious security risk.
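For comparison, a DinD-style image build with the drone-docker plugin usually looks like the step below. This is a sketch that assumes the plugin's `plugins/docker` image and its `repo` and `dry_run` settings. The image name mirrors the one used later in this article.

To our understanding, Drone's runner launches this plugin image privileged by default so that its embedded dockerd can start. That dockerd, together with its layer cache, is discarded when the step ends.

```yaml
steps:
- name: docker-build
  image: plugins/docker       # drone-docker plugin; ships its own dockerd
  settings:
    repo: my-repo/new-image
    dry_run: true             # build only, skip the registry push
```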

So isn’t there a better solution that can truly reconcile build efficiency and system security? Yes, there is …

Proposed Solution

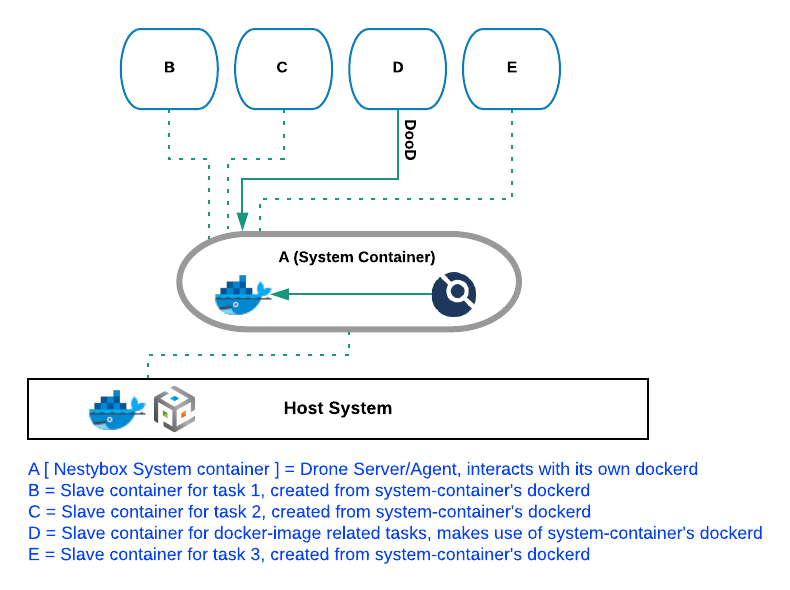

Our proposed solution relies on the use of Nestybox’s system containers and the application of the following basic guidelines:

- Use system containers to enclose the drone-agent and drone-server binaries.

- Use the system container’s dockerd for all pipeline tasks that require container interaction. The host’s dockerd is used exclusively to bring up the system containers hosting the drone-server and/or drone-agent binaries.

- Avoid the drone-docker plugin due to the privileged requirement mentioned above. Instead, we suggest defining docker-pipelines by explicitly typing the required docker instructions.

- Bind-mount the system container’s Docker socket into the containers launched for image-building purposes.

See below for a visual representation of the solution just described.

What do we gain with this?

- Enhanced security:

  - By enclosing Drone binaries in system containers we are boosting the system defenses. Refer to this link for more details.

  - Host isolation: no resource in the host system needs to be exposed (bind-mounted) into the Drone ecosystem.

  - No slave container is ever launched with privileged capabilities, as there is no need to run nested dockerd instances.

- Pipeline efficiency:

  - The Docker daemon held within the system container is a single, persistent daemon shared by all its Docker clients. This dramatically improves Drone’s pipeline throughput by providing image-caching functionality.

- Integrated & portable ecosystem:

  By making use of system containers to host Drone binaries, users now have total flexibility to install applications that can boost the CI/CD experience, such as:

  - Telegraf: to extract build metrics out of Drone’s own dockerd.

  - cAdvisor: to collect detailed per-container resource-utilization stats.

  - Prometheus: to display collected information and store it in a time-series DB.

  - Static-analysis tools: to assess code quality right from the path where the VCS repositories are cloned (it wouldn’t make much sense to have these tools running in the host system).

Note that some of these applications require privileged access to the Docker socket to collect information, which by itself sounds like a risky business. Luckily, with this solution we are dealing with a dedicated Docker daemon inside the system container rather than the host’s, so there’s not much to worry about.

Once the desired setup is built, all that is left is to save the system container’s image in our desired image repository. At this point we have built a truly self-contained CI/CD ecosystem that is efficient, secure and portable.

Proposed Solution Setup

The solution discussed above can be easily tested by running the following docker-compose recipe and creating a Drone pipeline such as the one displayed further below.

Notice that there are only three minor differences between the docker-compose recipe below and the one used in traditional approaches:

- In the image field we are introducing customized drone-server and drone-agent images that incorporate a Docker daemon binary. These images are available in our public Docker Hub repository and the corresponding Dockerfiles are here.

- The runtime field is now present to request the use of Nestybox’s container runtime: sysbox-runc.

- Finally, note that no host resource needs to be bind-mounted into Drone’s infrastructure.

Note that, technically speaking, in Drone’s multi-agent setups only the drone-agent container needs a Docker daemon. Thus, for the drone-server configuration below, we could have just relied on the traditional docker-compose recipe (i.e. no need for a customized image and runtime); we are displaying them here to cover the agentless scenario too.
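For a multi-agent deployment, the drone-server service could therefore keep a stock image under the default runtime. The fragment below is a sketch that assumes Drone 1.x's `drone/drone:1` server image. Only a few settings are repeated here; the rest would match the recipe below.

```yaml
version: '2.3'

services:
  drone-server:
    # Stock server image and default runc runtime: no embedded dockerd is
    # needed when every pipeline runs on a separate drone-agent.
    image: drone/drone:1
    ports:
      - 80:80
    environment:
      - DRONE_RPC_SECRET=my-secret
      - DRONE_AGENTS_ENABLED=true
      # ...remaining DRONE_* settings as in the recipe below
```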

    $ cat docker-compose.yml
    version: '2.3'

    services:
      drone-server:
        image: nestybox/ubuntu-bionic-drone-server:latest
        runtime: sysbox-runc
        ports:
          - 80:80
        environment:
          - DRONE_OPEN=true
          - DRONE_GITHUB=true
          - DRONE_GITHUB_SERVER=https://github.com
          - DRONE_SERVER_HOST=my-drone.server.com
          - DRONE_SERVER=http://my-drone.server.com
          - DRONE_GITHUB_CLIENT_ID=da96f2060001a68100ed
          - DRONE_GITHUB_CLIENT_SECRET=4601234fe84b5b738a2954b40ecf03ece01231a1
          - DRONE_RPC_SECRET=my-secret
          - DRONE_AGENTS_ENABLED=true
          - DRONE_USER_CREATE=username:nestybox,admin:true,token:55f24eb3d61ef6ac5e83d55017860000

      drone-agent:
        image: nestybox/ubuntu-bionic-drone-agent:latest
        runtime: sysbox-runc
        restart: always
        depends_on:
          - drone-server
        environment:
          - DRONE_SERVER_HOST=my-drone.server.com:80
          - DRONE_RPC_SECRET=my-secret

In terms of pipeline definition we should take the following points into account:

- We are not using the drone-docker plugin for the reasons discussed above (i.e. the “privileged” requirement). Instead, we are utilizing a regular (non-privileged) container with Docker CLI and/or Docker SDK functionality.

- We are bind-mounting the system container’s Docker socket into the slave container that will be executing the pipeline tasks (DooD approach).

    $ cat .drone.yml
    ...
    steps:
    - name: build
      image: golang:latest
      commands:
      - go build

    - name: docker-build
      image: docker
      volumes:
      - name: cache
        path: /var/run/docker.sock
      commands:
      - docker build -t my-repo/new-image .

    volumes:
    - name: cache
      host:
        path: /var/run/docker.sock

Refer to this simple GitHub repository (forked off of the Drone-demos project) if you need more details about the docker-compose recipe and the pipeline configuration used in this example.

Proposed Solution Test

The scenario described above can be easily brought up by following these basic steps:

-

Install Nestybox’s runtime. Please refer to our web-page for a free trial.

-

Make use of GitHub UI to fork this repository to your personal GitHub workspace. You will be expected to make minor changes to this repo to test the CI pipeline execution.

-

Clone your newly imported repository:

$ git clone https://github.com/<user-github-id>/drone-with-go.git -

Adjust the docker-compose file with your own desired parameters (i.e. drone-server url, port, etc). You will also need to grant your to-be-created Drone server with the proper permissions to interact with Github: see Step-1 here for more details.

-

Launch docker-compose to spawn the Drone setup – by default we are creating two system-containers corresponding to a drone-server and a drone-agent.

-

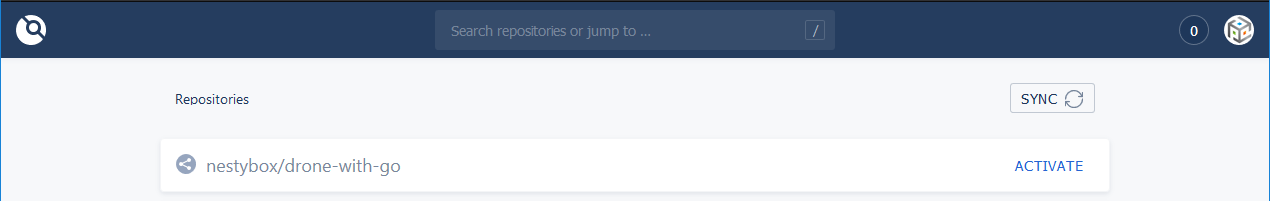

Open a web-browser and type your drone-server URL. If the setup is properly configured you should see something like this:

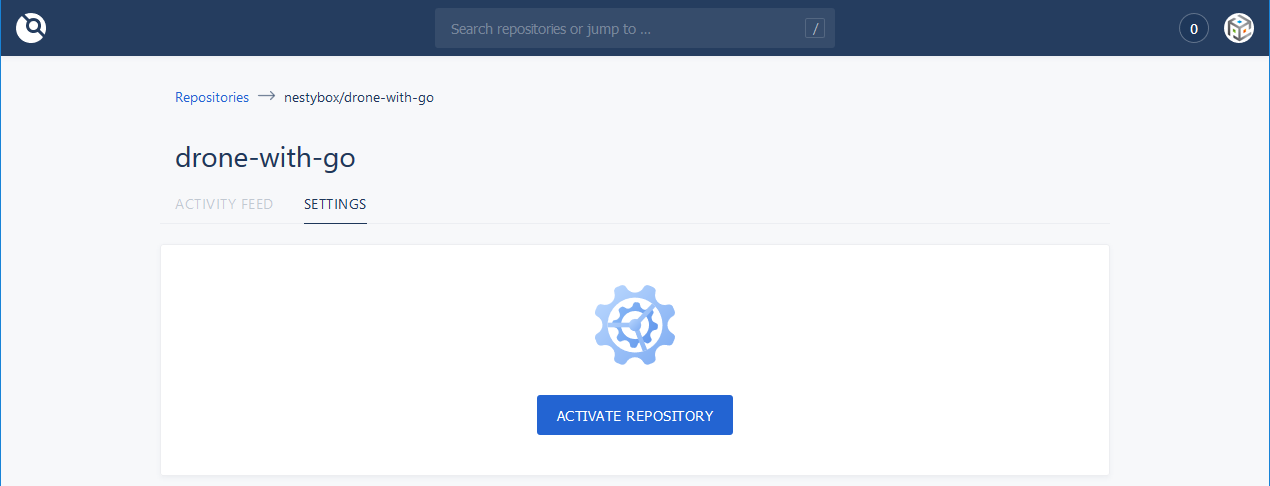

- Activate the test repository that we previously forked/cloned:

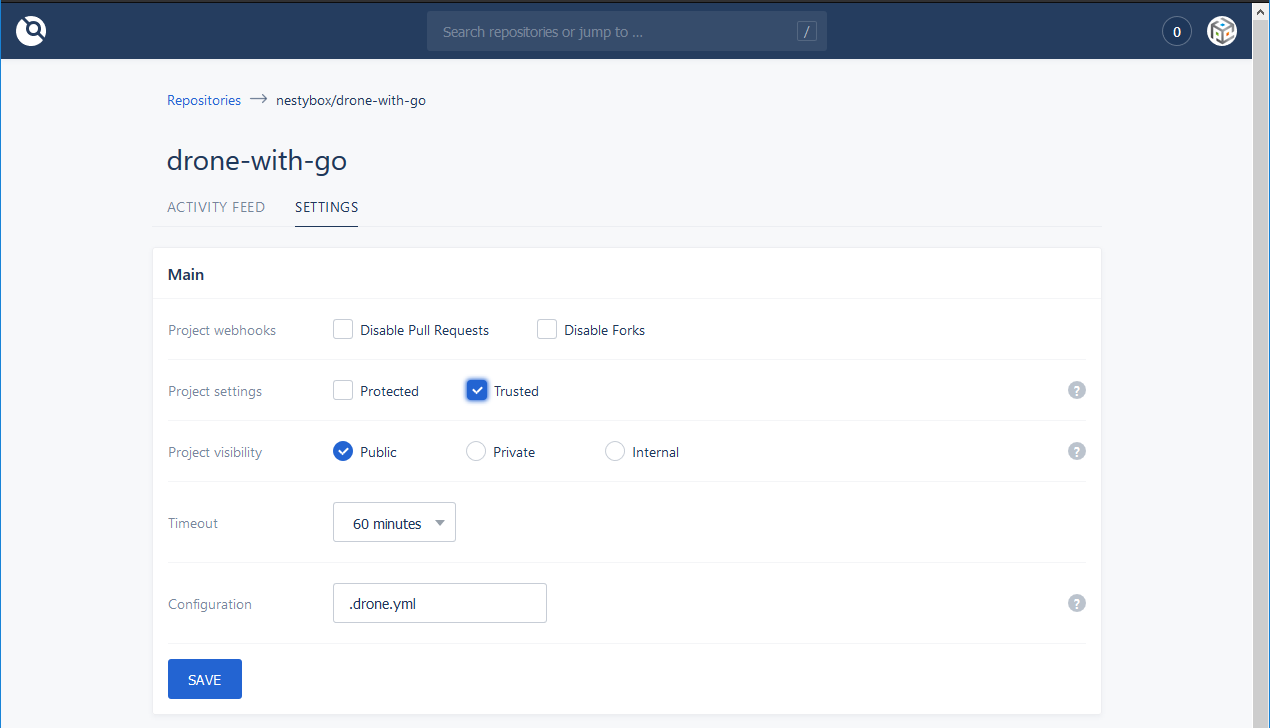

- In the Settings tab, turn on the Trusted flag to allow the Docker socket to be mounted within the Drone system containers:

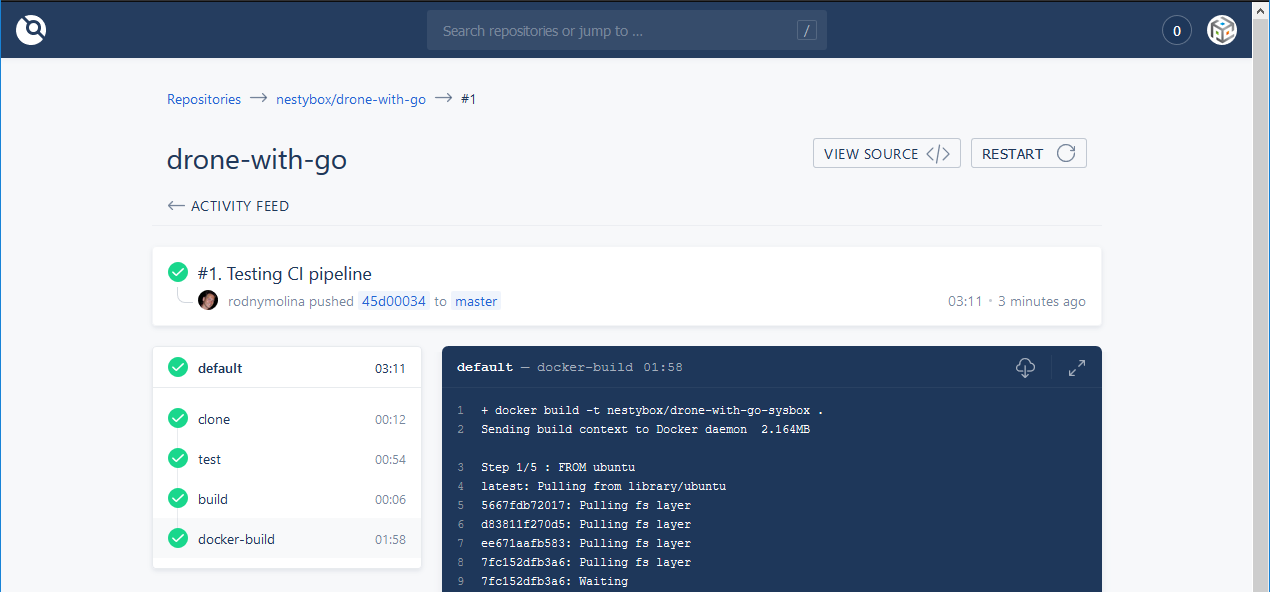

- Push a change into this repo to verify the correct behavior of the pipeline. If everything works as expected you should see something like this:

That’s all, hope it helps!

Check our Free Trial!

If you want to give our proposal a shot, please refer to this link for a free trial. Your feedback will be much appreciated!