Secure Docker-in-Docker with System Containers

September 14, 2019

Docker containers are great at running application micro-services. But can you run Docker itself inside a Docker container?

This article describes Docker-in-Docker, the use cases for it, pros & cons of existing solutions, and how Nestybox provides a solution that allows you to run Docker-in-Docker securely, without using privileged containers.

Docker users (e.g., app developers, QA engineers, and DevOps) will find this info useful.

Note that this article is specific to Docker containers on Linux.

See it work!

If you want to see how easy it is to deploy Docker-in-Docker securely using a Nestybox system container, check this screencast:

Other Nestybox blog posts show even more examples.

Contents

- What is Docker-in-Docker?

- Use Cases

- Docker-in-Docker Blues

- Docker-in-Docker Approaches

- Secure Docker-in-Docker with Nestybox

- In a Nutshell

- Check Out Our Free Trial!

What is Docker-in-Docker?

Docker-in-Docker, also known as DinD, is just what it says: running Docker inside a Docker container.

This implies that the Docker instance inside the container would be able to build containers and also run them.

Use Cases

So when would running Docker-in-Docker be useful? Turns out there are a few valid scenarios such as:

- Continuous Integration (CI) pipeline

DinD in CI pipelines is the most common use case. It shows up when a Docker container is tasked with building or running Docker containers. For example, in a Jenkins pipeline, the agent may be a Docker container tasked with building or running other Docker containers. This requires Docker in Docker.

But CI is not the only use case. Another common one is developers who want to experiment with Docker containers in a sandboxed environment, isolated from the host environment where they do real work. In this case Docker-in-Docker is a great solution.

Docker-in-Docker Blues

You would think that running Docker inside a container should work without problems. After all, containers run programs in isolation and Docker is just another program.

But things are a bit more complex. Docker is not a regular application like the ones that typically run inside containers. It’s a system-level program that has deep interactions with the filesystem and the Linux kernel.

It turns out system-level programs don’t always run correctly inside Docker containers, for a variety of low-level technical reasons.

In the case of Docker specifically, it does not run inside a regular Docker container because the container does not expose sufficient kernel resources and appropriate permissions. In addition, there are issues related to Docker’s use of overlay filesystems, security profiles, etc., that don’t work well inside a container.

This blog article by Jérôme Petazzoni (until recently a developer at Docker) describes some of these problems and even recommends that Docker-in-Docker be avoided.

But things have improved a bit since the article was written and Docker now officially supports running Docker-in-Docker (although it requires an insecure container as described below).

In addition, Nestybox system containers have been specifically designed to address these problems while ensuring the container is secure.

Docker-in-Docker Approaches

Currently, there are two well-known options to run Docker inside a container:

- Running the Docker daemon inside a container, using Docker’s DinD container image.

- Running only the Docker CLI (or Docker SDK) in a container, and connecting it to the Docker daemon on the host. This approach has been nicknamed Docker-out-of-Docker (DooD).

I will first describe each of these approaches and their respective benefits and drawbacks.

I will then describe how Nestybox system containers offer an alternative option that overcomes the shortcomings of these other options.

Docker-in-Docker (DinD)

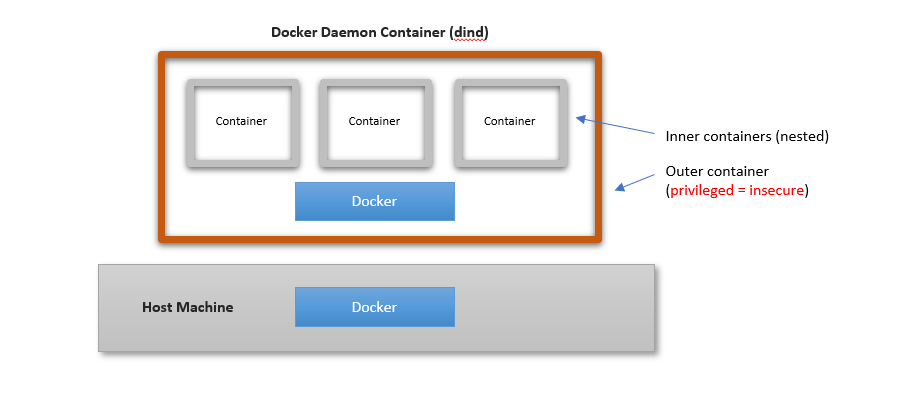

In the DinD approach, the Docker daemon runs inside a container and any (child) containers it creates exist inside said container (i.e., child containers are nested inside the parent container).

Docker provides a DinD container image that comes with a Docker daemon inside of it.

It’s very easy to set up, but there’s a catch: it requires that the Docker daemon container be configured as a “privileged” container, as shown in the figure above.
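For reference, a privileged DinD launch might look like the sketch below. The function name `start_privileged_dind` and the default container name `some-docker` are illustrative choices, not something this article prescribes, and networking and TLS options are left out for brevity.

```shell
# Sketch: start Docker's official DinD image as a privileged container.
# The function name and default container name are illustrative assumptions.
start_privileged_dind() {
  name="${1:-some-docker}"
  # --privileged gives the inner Docker daemon the access it needs,
  # and is also what weakens isolation from the host.
  docker run --privileged --name "$name" -d docker:dind
}

# Usage:
#   start_privileged_dind my-dind
```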

Running a privileged container reduces isolation between the container and the underlying host and creates security risks, because the init process inside the container runs with the same privileges as the root user on the host. See here and here for detailed explanations on the drawbacks.

It may be a viable (though risky) solution in trusted environments, but it’s not a viable solution in environments where you don’t trust the workloads running inside the DinD container.

For this reason, use of DinD is generally not recommended by Docker (even though it’s officially supported).

Docker-out-of-Docker (DooD)

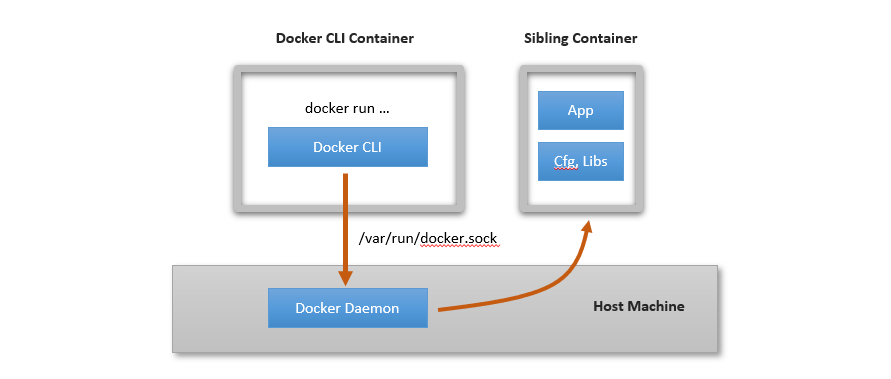

In the DooD approach, only the Docker CLI runs in a container and connects to the Docker daemon on the host. The connection is made by mounting the host’s Docker socket into the container that runs the Docker CLI. For example:

$ docker run -it -v /var/run/docker.sock:/var/run/docker.sock docker

In this approach, containers created from within the Docker CLI container are actually sibling containers (spawned by the Docker daemon in the host). There is no Docker daemon inside a container and thus no container nesting. It has been nicknamed Docker-out-of-Docker (DooD).

The figure above illustrates this.

This approach has some benefits but also important drawbacks.

One key benefit is that it bypasses the complexities of running the Docker daemon inside a container and does not require an insecure privileged container.

It also avoids having multiple Docker image caches in the system (since there is only one Docker daemon on the host), which may be good if your system is constrained on storage space.

But it has important drawbacks too.

The main drawback is that it results in poor context isolation because the Docker CLI runs within a different context than the Docker daemon. The former runs within the container’s context; the latter runs within the host’s context. This leads to problems such as:

- Container naming collisions: if the container running the Docker CLI creates a container named some_cont, the creation will fail if some_cont already exists on the host. Avoiding such naming collisions may not always be trivial, depending on the use case.

- Mount paths: if the container running the Docker CLI creates a container with a bind mount, the mount path must be specified relative to the host’s filesystem, not the CLI container’s (as otherwise the Docker daemon on the host won’t be able to perform the mount correctly).

- Port mappings: if the container running the Docker CLI creates a container with a port mapping, the port mapping occurs at the host level, potentially colliding with other port mappings.
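One way to reduce the risk of naming collisions is to derive container names from something unique to the CLI container. The sketch below prefixes names with the container's hostname, which Docker sets to the short container ID by default. The helper name `unique_name` is hypothetical, and this is a workaround rather than a fix for the underlying lack of isolation.

```shell
# Sketch: build a container name that is unlikely to collide with names
# already in use on the host. `unique_name` is a hypothetical helper.
unique_name() {
  base="$1"
  # Inside a Docker container, the hostname defaults to the short container ID.
  printf '%s-%s\n' "$base" "$(hostname)"
}

# Usage from inside the Docker CLI container:
#   docker run -d --name "$(unique_name some_cont)" busybox sleep 3600
```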

This approach is also not a good idea if the containerized Docker is orchestrated by Kubernetes. In this case, any containers created by the containerized Docker CLI will not be encapsulated within the associated Kubernetes pod, and will thus be outside of Kubernetes’ visibility and control.

Finally, there are security concerns too: the container running the Docker CLI can manipulate any containers running on the host. It can remove containers created by other entities on the host, or even create insecure privileged containers, putting the host at risk.
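To make the security concern concrete, the sketch below lists every container on the host from inside a throwaway Docker CLI container that has the host's socket mounted. The function name is illustrative; the same access would allow stopping or removing those containers.

```shell
# Sketch: anything with access to the host's Docker socket can see (and
# manage) every container on the host. The function name is illustrative.
list_host_containers_via_dood() {
  docker run --rm \
    -v /var/run/docker.sock:/var/run/docker.sock \
    docker docker ps -a
}

# Usage (on a host running Docker):
#   list_host_containers_via_dood
```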

Depending on your use case and environment, these drawbacks may rule out this approach.

For more info on these issues, check these excellent articles from Applatix (now Intuit) on why DooD was not a good fit for them.

Secure Docker-in-Docker with Nestybox

As described above, both the Docker DinD image and Docker-out-of-Docker approaches have some important drawbacks.

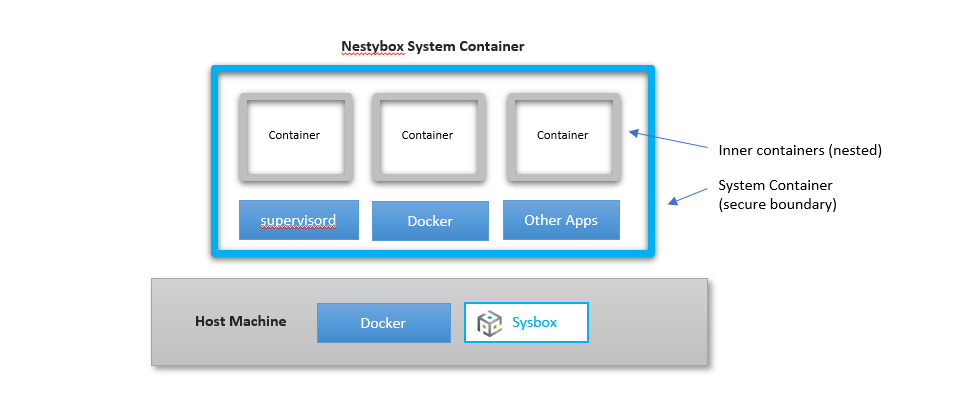

Nestybox offers an alternative solution that overcomes these drawbacks: run Docker-in-Docker using system containers. In other words, use Docker to deploy a system container, and run Docker inside the system container.

Within a Nestybox system container you are able to run Docker inside the container easily and securely, with total isolation between the Docker inside the system container and the Docker on the host. No need for privileged containers anymore.

You avoid the issues with Docker-out-of-Docker and enable use of Docker-in-Docker in environments where the workloads are untrusted.

Our solution is very simple to use:

1) Install Nestybox’s system container runtime “Sysbox” on your Linux machine.

- Our download page contains all the instructions. It installs very quickly, integrates with Docker, and works under the covers.

2) Use Docker to launch the system container just as any other container.

- You only need to pass the --runtime=sysbox-runc flag to Docker. That’s all.
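Before launching a system container, it can help to confirm that Docker knows about the new runtime. The sketch below assumes that `docker info` lists the registered runtimes by name in its output; `has_sysbox_runtime` is a hypothetical helper name.

```shell
# Sketch: succeed if Docker lists sysbox-runc among its runtimes.
# `has_sysbox_runtime` is a hypothetical helper name.
has_sysbox_runtime() {
  docker info 2>/dev/null | grep -q 'sysbox-runc'
}

# Usage:
#   has_sysbox_runtime && echo "sysbox-runc is available"
```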

For example, we can leverage Docker’s official DinD image and run it in a system container. The instructions are similar to those on the Docker Hub site, except that we have removed the docker run --privileged flag and replaced it with the --runtime=sysbox-runc flag.

First, launch the system container with the DinD image (the image has the Docker daemon in it):

$ docker network create some-network

$ docker run --runtime=sysbox-runc \
    --name dind-syscont -d \
    --network some-network --network-alias docker \
    -e DOCKER_TLS_CERTDIR=/certs \
    -v dind-syscont-certs-ca:/certs/ca \
    -v dind-syscont-certs-client:/certs/client \
    docker:dind

Then launch a regular container with the Docker client in it.

$ docker run -it --rm \
    --network some-network \
    -e DOCKER_TLS_CERTDIR=/certs \
    -v dind-syscont-certs-client:/certs/client:ro \
    docker:latest sh
/ #

Notice that the client container need not be a system container because it’s only running the Docker CLI in it, not the Docker daemon (although you could launch it as a system container if you want the extra security provided by the system container’s Linux user namespace).
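If you want to see the user namespace at work, one option is to inspect /proc/self/uid_map inside a container. The sketch below reads that file's format (ID inside the namespace, ID outside, range length) from standard input and reports whether root inside maps to a non-root ID outside. The helper name `root_is_remapped` is hypothetical, and the expectation that a system container shows a remapped root is an assumption based on the user-namespace isolation mentioned above.

```shell
# Sketch: read uid_map content on stdin and succeed if uid 0 inside the
# namespace maps to a non-zero uid outside. Hypothetical helper name.
root_is_remapped() {
  # Each uid_map line: <uid inside namespace> <uid outside> <range length>
  while read -r inside outside _length; do
    if [ "$inside" = "0" ]; then
      [ "$outside" != "0" ]
      return
    fi
  done
  # No mapping for uid 0 was found.
  return 1
}

# Usage, with the daemon container named as in the example above:
#   docker exec dind-syscont cat /proc/self/uid_map | root_is_remapped \
#     && echo "root in the container is not root on the host"
```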

Once you have the Docker client running, you can now send requests to the Docker daemon system container. For example, to create an inner busybox container:

/ # docker run -it busybox
Unable to find image 'busybox:latest' locally
latest: Pulling from library/busybox
7c9d20b9b6cd: Pull complete
Digest: sha256:fe301db49df08c384001ed752dff6d52b4305a73a7f608f21528048e8a08b51e
Status: Downloaded newer image for busybox:latest
/ #

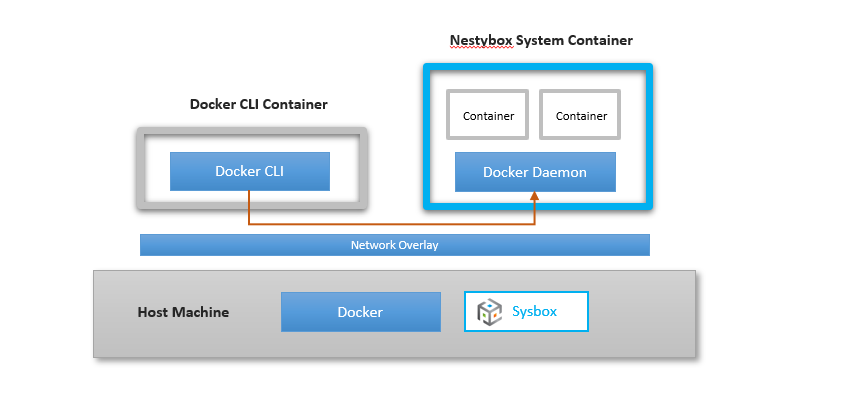

The above example leverages Docker’s official DinD image, and uses two containers as shown in the figure above (one with the Docker daemon and one with the Docker CLI).

Alternatively you could also create a single system container image that works as a Docker sandbox, inside of which you can run Docker (both the CLI and the daemon) as well as any other programs you want. This Nestybox blog article has examples.

In a Nutshell

- There are valid use cases for running Docker-in-Docker (DinD).

- Docker’s officially supported DinD solution requires a privileged container. It’s not ideal. It may be fine in trusted scenarios, but it’s risky otherwise.

- There is an alternative that consists of running only the Docker CLI in a container and connecting it with the Docker daemon on the host. It’s nicknamed Docker-out-of-Docker (DooD). While it has some benefits, it also has several drawbacks which may rule out its use depending on your environment.

- Nestybox system containers offer a new alternative. They support running Docker-in-Docker securely, without using privileged containers and with total isolation between the Docker in the system container and the Docker on the host. It’s very easy to use; in fact you use the same command as running Docker’s official DinD image, except that you pass --runtime=sysbox-runc instead of the --privileged flag.

- Nestybox is looking for early adopters to try our system containers. Try our Free Trial, and give us your feedback!

Check Out Our Free Trial!

We have developed a prototype and are looking for early adopters.

Give our free trial a shot; we think you’ll find it useful.

Your feedback would be much appreciated!