Securing GitLab CI pipelines with Sysbox

October 21, 2020

Intro

Continuous integration (CI) jobs often require interaction with Docker, either for building Docker images and/or deploying Docker containers.

One of the most popular DevOps tools for CI is GitLab, as it offers a complete suite of tools for the DevOps lifecycle. While GitLab is an excellent tool suite, it offers weak security when running CI jobs that require interaction with Docker.

These security weaknesses allow CI jobs, whether inadvertently or maliciously, to perform root level operations on the machine where the job executes, thus compromising the stability of the CI infrastructure and possibly beyond.

This article explains these security issues and shows how the Sysbox container runtime, developed by Nestybox, can be used to harden the security of these CI jobs while at the same time empowering users to create powerful CI pipeline configurations with Docker.

TL;DR

The article is a bit long as it starts by giving a detailed explanation of the security related problems for GitLab jobs that require interaction with Docker.

If you understand these problems already, you may want to jump directly to the solution in section Securing GitLab with Sysbox.

Contents

- Security Problems with GitLab + Docker

- Security issues with the Shell Executor

- Security issues with the Docker Executor

- A word on Kaniko

- Securing GitLab with Sysbox

- Inner Docker Image Caching

- Setups that (currently) don’t work

- Conclusion

- Useful Links

Security Problems with GitLab + Docker

It is common for CI jobs to require interaction with Docker, often to build container images and/or to deploy containers. These jobs are typically composed of steps executing Docker commands such as docker build, docker push, or docker run.

In GitLab, CI jobs are executed by the “GitLab Runner”, an agent that installs on a host machine and executes jobs as directed by the GitLab server (which normally runs in a separate host).

The GitLab runner supports multiple “executors”, each of which represents a different environment for running the jobs.

For CI jobs that interact with Docker, GitLab recommends one of the following executor types:

- The Shell Executor

- The Docker Executor

Both of these however suffer from weak security for jobs that interact with Docker, meaning that such jobs can easily gain root level access to the machine where the job is executing, as explained below.

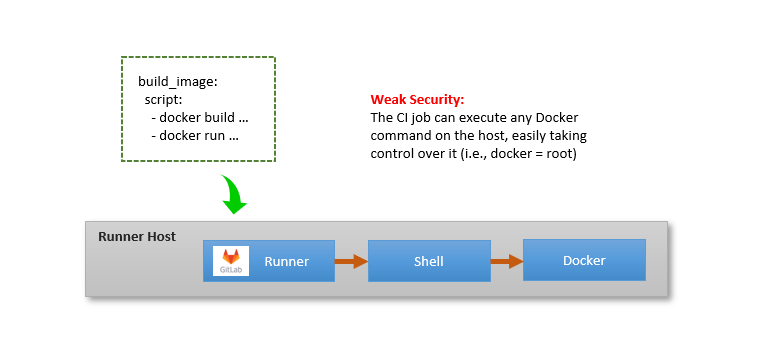

Security issues with the Shell Executor

When using the shell executor, the CI job is composed of shell commands executed in the same context as the GitLab runner.

The diagram below shows the context in which the job executes:

A sample .gitlab-ci.yml looks like this:

build_image:
  script:
    - docker build -t my-docker-image .
    - docker run my-docker-image /script/to/run/tests

The shell executor is powerful due to the flexibility of the shell, but it suffers from a few problems:

1) The job executes within the GitLab runner’s host environment, which may or may not be clean (e.g., depending on the state left by prior jobs).

2) Any job dependencies must be pre-installed into the runner machine a priori.

3) If the job needs to interact with Docker, the GitLab runner needs to be added to the docker group, which in essence grants root level access to the job on the runner machine.

Thus, from a security perspective, the shell executor is not a good idea for jobs that interact with Docker.

For example, the CI job could easily take over the runner machine by executing a command such as docker run --privileged -v /:/mnt alpine <some-cmd>. In such a container, the job will have unfettered root level access to the entire filesystem of the runner machine via the /mnt directory in the container.
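Whether a runner machine is exposed in this way comes down to group membership. The check below is a minimal sketch: it assumes the runner runs as a user named gitlab-runner (an assumption; substitute the account your runner actually uses), and the helper name has_group is purely illustrative.

```shell
#!/bin/sh
# has_group LIST NAME: succeed if NAME is one of the space-separated
# group names in LIST (the format printed by `id -nG`).
has_group() {
  case " $1 " in
    *" $2 "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Assumed account name; adjust to match your installation.
runner_user="gitlab-runner"

if runner_groups=$(id -nG "$runner_user" 2>/dev/null); then
  if has_group "$runner_groups" docker; then
    echo "WARNING: $runner_user is in the docker group (root-equivalent on this host)"
  else
    echo "$runner_user is not in the docker group"
  fi
else
  echo "user $runner_user not found on this machine"
fi
```

If the warning appears, any job that runs under that account can issue the docker run --privileged command shown above.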

Security issues with the Docker Executor

When using the Docker executor, the CI job runs within one or more Docker containers. This solves problems (1) and (2) of the shell executor (see prior section), as you get a clean environment prepackaged with your job’s dependencies.

However, if the CI job needs to interact with Docker itself (e.g. to build Docker images and/or deploy containers), things get tricky.

In order for such a job to run, the job needs access to a Docker engine. GitLab recommends two ways to do this:

1) Binding the host’s Docker socket into the job container

2) Using a Docker-in-Docker (DinD) “service” container

Unfortunately, both of these are insecure setups that easily allow the job to take control of the runner machine, as described below.

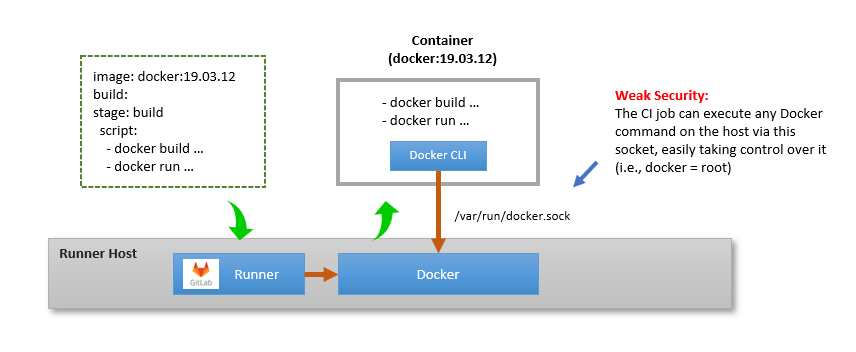

Binding the host Docker Socket into the Job Container

This setup is shown below.

A sample .gitlab-ci.yml looks like this:

image: docker:19.03.12

build:
  stage: build
  script:
    - docker build -t my-docker-image .
    - docker run my-docker-image /script/to/run/tests

As shown, the Docker container running the job has access to the host machine’s Docker daemon via a bind-mount of /var/run/docker.sock.

To do this, you must configure the GitLab runner as follows (pay attention to the volumes clause):

[[runners]]
  url = "https://gitlab.com/"
  token = REGISTRATION_TOKEN
  executor = "docker"
  [runners.docker]
    tls_verify = false
    image = "docker:19.03.12"
    privileged = false
    disable_cache = false
    volumes = ["/var/run/docker.sock:/var/run/docker.sock", "/cache"]

This is the so-called “Docker-out-of-Docker” (DooD) approach: the CI job and Docker CLI run inside a container, but the commands are executed by a Docker engine at host level.

From a security perspective, this setup is not kosher: the container running the CI job has access to the Docker engine on the runner machine, in essence granting root level access to the CI job on that machine.

For example, the CI job can easily gain control of the host machine by creating a privileged Docker container with a command such as docker run --privileged -v /:/mnt alpine <some-cmd>. Or the job can remove all containers on the runner machine with a simple docker rm -f $(docker ps -a -q) command.

In addition, the DooD approach also suffers from context problems: the Docker commands are issued from within the job container, but are executed by a Docker engine at host level (i.e., in a different context). This can lead to collisions among jobs (e.g., two jobs running concurrently may collide by creating containers with the same name). Also, mounting files or directories to the created containers can be tricky since the contexts of the job and Docker engine are different.
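One way to sidestep the name collisions (though not the security problem) is to derive container names from the job itself. The sketch below assumes the predefined GitLab CI variable CI_JOB_ID is present in the job environment; the helper name job_container_name and the base name tests are illustrative only.

```shell
# job_container_name BASE: print a container name that is unique per CI job.
# CI_JOB_ID is set by GitLab for each job; fall back to "local" outside CI.
job_container_name() {
  printf '%s-%s\n' "$1" "${CI_JOB_ID:-local}"
}

# Example use in a job script (commented out so the sketch has no side effects):
#   name=$(job_container_name tests)
#   docker run --rm --name "$name" my-docker-image /script/to/run/tests
```

Two concurrent jobs then ask the shared host engine for differently named containers, so they no longer step on each other.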

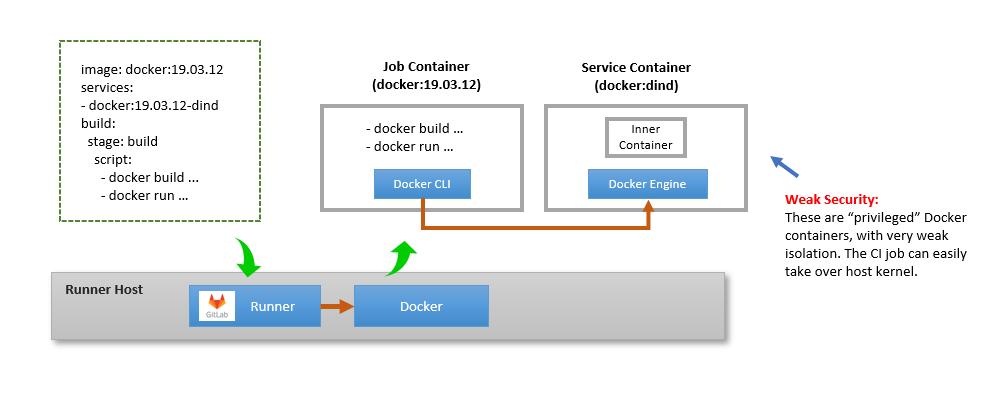

Using a Docker-in-Docker Service Container

This setup is shown below.

A sample .gitlab-ci.yml looks like this:

image: docker:19.03.12

services:
  - docker:19.03.12-dind

build:
  stage: build
  script:
    - docker build -t my-docker-image .
    - docker run my-docker-image /script/to/run/tests

As shown, GitLab deploys the job container alongside a “service” container. The latter contains within it a Docker engine, using the “Docker-in-Docker” (DinD) approach.

This gives the CI job a dedicated Docker engine, thus preventing the CI job from accessing the host’s Docker engine. In doing so, it prevents the collision problems described in the prior section (though the problems related to mounting files or directories remain).

To do this, you must configure the GitLab runner as follows (pay attention to the privileged and volumes clauses):

[[runners]]
  url = "https://gitlab.com/"
  token = REGISTRATION_TOKEN
  executor = "docker"
  [runners.docker]
    tls_verify = true
    image = "docker:19.03.12"
    privileged = true
    disable_cache = false
    volumes = ["/certs/client", "/cache"]

The volumes clause must include the /certs/client mount in order to enable the job container and service container to share Docker TLS credentials.

But notice the privileged clause: it’s telling GitLab to use privileged Docker containers for the job container and the service container. This is needed because the service container runs the Docker daemon inside, and normally this requires insecure privileged containers (though Sysbox removes this requirement, as you’ll see a bit later).

Privileged containers weaken security significantly. For example, the CI job can easily control the host machine’s kernel by executing docker run --privileged alpine <cmd>, where <cmd> has full read/write access to the machine’s /proc filesystem and is thus able to perform all sorts of low-level kernel operations (including, for example, turning off the runner machine).
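Both risky settings discussed so far (the host Docker socket mount and privileged = true) are visible in the runner's configuration file, so they are easy to audit. The following is a rough sketch: the function name audit_runner_config is made up for illustration, and the grep patterns only cover the simple layouts shown in this article.

```shell
# audit_runner_config FILE: print one line per risky setting found in FILE.
audit_runner_config() {
  cfg=$1
  # privileged = true lets job and service containers run privileged.
  if grep -Eq '^[[:space:]]*privileged[[:space:]]*=[[:space:]]*true' "$cfg"; then
    echo "privileged containers enabled"
  fi
  # A docker.sock entry hands jobs the host's Docker engine (DooD).
  if grep -q '/var/run/docker\.sock' "$cfg"; then
    echo "host Docker socket mounted"
  fi
  return 0
}

# Usage (reading the file may require root):
#   audit_runner_config /etc/gitlab-runner/config.toml
```

An empty result does not prove the runner is safe; it only means neither of these two patterns was found.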

A word on Kaniko

If you simply want your CI job to build Docker images but not interact with Docker in other ways, a good alternative is Kaniko. It’s a tool that runs inside a container and is capable of building Docker images without a Docker daemon. GitLab’s documentation describes how to use it with GitLab CI.

From a security perspective, Kaniko avoids the problems described in prior sections because the CI job need not communicate with a Docker daemon.

The drawback is that Kaniko only helps if your CI job just needs to build images; it is not a solution if your CI job needs Docker for more advanced setups (e.g., deploying the built containers with Docker Compose).

For CI jobs that require communication with Docker, the Sysbox container runtime enables simple yet powerful and secure solutions, as described next.

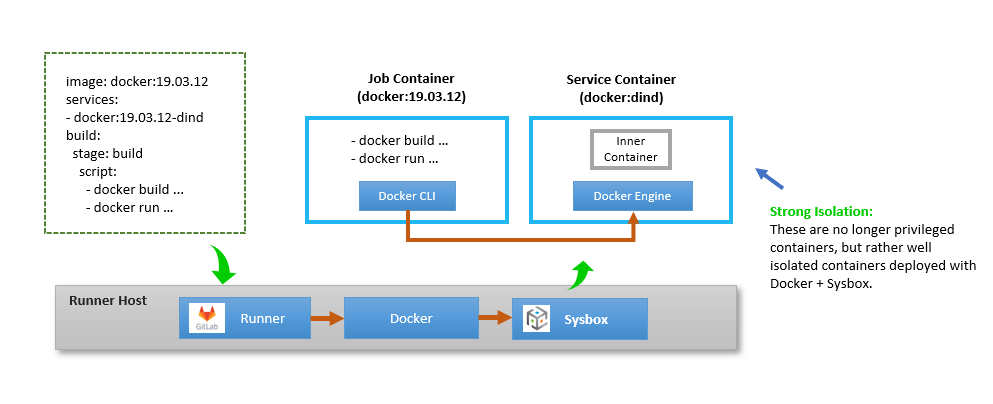

Securing GitLab with Sysbox

The Sysbox container runtime offers a solution to the security problems described above.

It does so by virtue of enabling containers to run “system software” such as Docker, easily and with proper container isolation (e.g., without resorting to privileged containers). We call these system containers.

There are a couple of ways in which Sysbox can be used to better secure GitLab CI jobs that interact with Docker:

- GitLab runner on host deploys jobs in system containers

- GitLab runner & Docker execute inside a system container

The sections below describe both of these approaches.

GitLab Runner Deploys Jobs in System Containers

The setup for this is shown below.

As shown, the runner machine has the GitLab runner agent, Docker, and Sysbox installed.

The goal is for the GitLab runner to execute jobs inside containers deployed with Docker + Sysbox. This way, CI jobs that require interaction with Docker can use the Docker-in-Docker service container, knowing that it will be properly isolated from the host (because Sysbox enables Docker-in-Docker securely).

In order for this to happen, one has to configure the GitLab runner to select Sysbox as the container “runtime” and disable the use of “privileged” containers.

Here is the runner’s config file (at /etc/gitlab-runner/config.toml). Pay attention to the privileged and runtime clauses:

[[runners]]
  url = "https://gitlab.com/"
  token = REGISTRATION_TOKEN
  executor = "docker"
  [runners.docker]
    tls_verify = true
    image = "docker:19.03.12"
    privileged = false
    disable_cache = false
    volumes = ["/certs/client", "/cache"]
    runtime = "sysbox-runc"

Unfortunately there is a small wrinkle (for now at least): the GitLab runner currently has a weird behavior in which the “runtime” configuration is honored for the job containers but not honored for the “service” containers, which is a problem since the DinD service container is precisely the one we must run with Sysbox (to enable secure Docker-in-Docker). This appears to be a bug in the GitLab runner.

As a work-around, you can configure the Docker engine on the runner machine to select Sysbox as the “default runtime”. You do this by configuring the /etc/docker/daemon.json file as follows (pay attention to the default-runtime clause):

{
  "default-runtime": "sysbox-runc",
  "runtimes": {
    "sysbox-runc": {
      "path": "/usr/local/sbin/sysbox-runc"
    }
  }
}

After this, restart Docker (e.g., sudo systemctl restart docker).

From now on, all Docker containers launched on the host will use Sysbox by default (rather than the OCI runc) and thus will be capable of running all jobs, including those using Docker-in-Docker with proper isolation.
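To confirm the change took effect, you can compare what the file declares with what the daemon reports. This is only a sketch: the helper declared_default_runtime is a hypothetical name, its sed pattern assumes the key and value sit on one line as in the daemon.json shown earlier, and the docker info template assumes your Docker version exposes the DefaultRuntime field.

```shell
# declared_default_runtime FILE: print the "default-runtime" value from a
# daemon.json-style file (simple line-based match, not a full JSON parser).
declared_default_runtime() {
  sed -n 's/.*"default-runtime"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

if [ -r /etc/docker/daemon.json ]; then
  echo "declared: $(declared_default_runtime /etc/docker/daemon.json)"
fi

# Ask the running daemon, if the docker CLI is available.
if command -v docker >/dev/null 2>&1; then
  echo "active: $(docker info --format '{{.DefaultRuntime}}' 2>/dev/null || true)"
fi
```

If the two values differ, the daemon has most likely not been restarted since the file was edited.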

With this configuration in place, the following CI job runs seamlessly and securely:

image: docker:19.03.12

services:
  - docker:19.03.12-dind

build:
  stage: build
  script:
    - docker build -t my-docker-image .
    - docker run my-docker-image /script/to/run/tests

With this setup you can be sure that your CI jobs are well isolated from the underlying host. Gone are the privileged containers that previously compromised host security for such jobs.

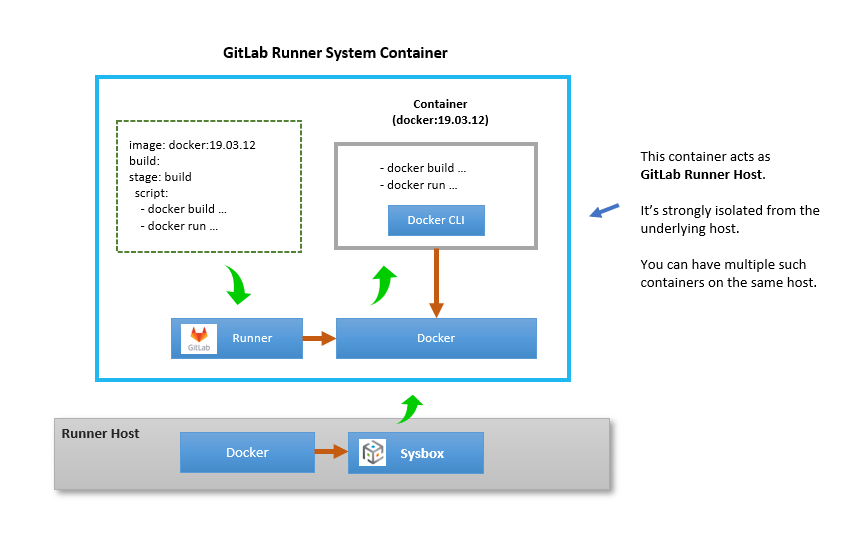

GitLab Runner & Docker in a System Container

The setup for this is shown below.

In this approach, we deploy the GitLab runner plus a Docker engine inside a system container. It follows that the CI jobs run inside that system container too, in total isolation from the underlying host.

In other words, the system container is acting like a GitLab runner “virtual host” (much like a virtual machine, but using fast & efficient containers instead of hardware virtualization).

Compared to the solution in the previous section, this approach has some benefits:

- It allows the GitLab CI jobs to use the shell executor or Docker executor (either the DooD or DinD approaches) without compromising host security, because the system container provides a strong isolation boundary.

- You can run many GitLab runners on the same host machine, in full isolation from one another. This way, you can easily deploy multiple customized GitLab runners on the same machine as you see fit, giving you more flexibility and improving machine utilization.

- You can easily deploy this system container on bare-metal machines, VMs in the cloud, or any other machine where Linux, Docker, and Sysbox are running. It’s a self-contained and complete GitLab runner + Docker environment.

But there is a drawback:

- For CI jobs that interact with Docker, the isolation boundary is at the system container level rather than at the job level. That is, such a CI job could easily gain control of the system container and thus compromise the GitLab runner environment, but not the underlying host.

Creating this setup is easy.

First, you need a system container image that includes the GitLab runner and a Docker engine. There is a sample image in the Nestybox Dockerhub Repo; the Dockerfile is here.

As you can see, the Dockerfile is very simple: it takes GitLab’s dockerized runner image, adds a Docker engine to it, and modifies the entrypoint to start Docker. That’s all … easy peasy.

You deploy this on the host machine using Docker + Sysbox:

$ docker run --runtime=sysbox-runc -d --name gitlab-runner --restart always -v /srv/gitlab-runner/config:/etc/gitlab-runner nestybox/gitlab-runner-docker

Then you register the runner with your GitLab server:

$ docker run --rm -it -v /srv/gitlab-runner/config:/etc/gitlab-runner gitlab/gitlab-runner register

You then configure the GitLab runner as usual. For example, you can enable the Docker executor with the DooD approach by editing the /srv/gitlab-runner/config/config.toml file as follows:

[[runners]]
  name = "syscont-runner-docker"
  url = "https://gitlab.com/"
  token = REGISTRATION_TOKEN
  executor = "docker"
  [runners.docker]
    tls_verify = false
    image = "docker:19.03.12"
    privileged = false
    disable_cache = false
    volumes = ["/var/run/docker.sock:/var/run/docker.sock", "/cache"]

Then restart the gitlab-runner container:

$ docker restart gitlab-runner

At this point you have the GitLab runner system container ready. You can then request GitLab to deploy jobs to this runner, knowing that the jobs will run inside the system container, in full isolation from the underlying host.

In the example above we used the DooD approach inside the system container, but we could have chosen the DinD approach too. The choice is up to you based on the pros/cons of DooD vs DinD as described above.

If you use the DinD approach, notice that the DinD containers will be privileged, but these privileged containers live inside the system container, so they are well isolated from the underlying host.

Inner Docker Image Caching

One of the drawbacks of placing the Docker daemon inside a container is that containers are non-persistent by default, so any images downloaded by the containerized Docker daemon will be lost when the container is destroyed. In other words, the containerized Docker daemon’s cache is ephemeral.

If you wish to retain the containerized Docker’s image cache, you can do so by bind-mounting a host volume into the /var/lib/docker directory of the container that has the Docker daemon inside.

For example, when using the approach in section GitLab Runner Deploys Jobs in System Containers, you do this by modifying the GitLab runner’s config (/etc/gitlab-runner/config.toml) as follows (notice the addition of /var/lib/docker to the volumes clause):

[[runners]]
  url = "https://gitlab.com/"
  token = REGISTRATION_TOKEN
  executor = "docker"
  [runners.docker]
    tls_verify = true
    image = "docker:19.03.12"
    privileged = false
    disable_cache = false
    volumes = ["/certs/client", "/cache", "/var/lib/docker"]
    runtime = "sysbox-runc"

With this configuration, when the GitLab runner deploys the job and service containers, it mounts a host volume (created automatically by Docker) into the container’s /var/lib/docker directory. As a result, container images downloaded by the Docker daemon inside the service container remain cached at host level across CI jobs.

As another example, if you are using the approach in section GitLab Runner & Docker in a System Container, then you do this by launching the system container with the following command (notice the volume mount on /var/lib/docker):

$ docker run --runtime=sysbox-runc -d --name gitlab-runner --restart always -v /srv/gitlab-runner/config:/etc/gitlab-runner -v inner-docker-cache:/var/lib/docker nestybox/gitlab-runner-docker

A couple of important notes:

- By making the containerized Docker’s image cache persistent, you are not just persisting images downloaded by the containerized Docker daemon; you are persisting the entire state of that Docker daemon (i.e., stopped containers, volumes, networks, etc.). Keep this in mind to make sure your CI jobs persist the state that you wish to persist, and explicitly clean up any state you do not wish to persist across CI jobs.

- A given host volume bind-mounted into a system container’s /var/lib/docker must only be mounted on a single system container at any given time. This is a restriction imposed by the inner Docker daemon, which does not allow its image cache to be shared concurrently among multiple daemon instances. Sysbox will check for violations of this rule and report an appropriate error during system container creation.
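Because the whole daemon state persists, a job can prune what it does not want to carry over while leaving images cached. The following is a minimal sketch meant to run against the inner Docker daemon at the end of a job; the function names and the DRY_RUN switch are assumptions made for illustration, not part of GitLab or Sysbox.

```shell
# run CMD...: execute CMD, or just print it when DRY_RUN is non-empty.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# cleanup_inner_docker: drop stopped containers, unused networks and unused
# volumes, but keep images so the cache stays warm for later jobs.
cleanup_inner_docker() {
  run docker container prune -f
  run docker network prune -f
  run docker volume prune -f
}

# Usage: preview first, then run for real.
#   DRY_RUN=1 cleanup_inner_docker
#   cleanup_inner_docker
```

Exactly which volumes docker volume prune removes can vary between Docker releases, so it is worth previewing the commands and checking the result on your version before relying on this in a pipeline.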

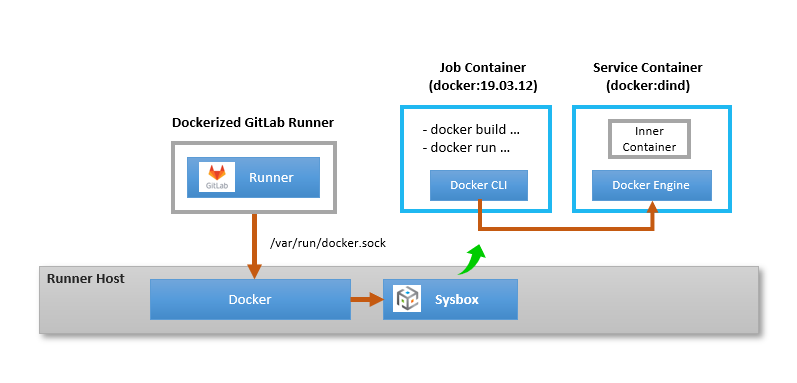

Setups that (currently) don’t work

One setup that currently doesn’t work is to use the official Dockerized GitLab Runner image, connect it to the host’s Docker socket, and have it deploy jobs with Sysbox.

It’s similar to the setup in section GitLab Runner Deploys Jobs in System Containers above, but uses the dockerized GitLab runner instead of installing it on the host directly.

Unfortunately this does not work yet because the goal is to have GitLab use Sysbox for the DinD service container. To do this, you would normally configure the GitLab runner “runtime” to Sysbox.

However, as mentioned previously, the GitLab runner has a weird behavior: it does not honor the runner’s “runtime” configuration for service containers. This in turn forces you to configure Sysbox as Docker’s “default runtime” on the host.

But configuring Sysbox as the default runtime on the host means that the Dockerized GitLab runner will be in a container deployed with Sysbox. This prevents the runner from starting correctly because it won’t have permission to talk to the Docker engine on the host via the mounted /var/run/docker.sock. That’s because Sysbox creates containers using the Linux user-namespace, which means the root in the container maps to an unprivileged user on the host, and that unprivileged user has no permission to access the host’s Docker socket.

We will work with GitLab to resolve this (i.e., to have the GitLab runner honor the runtime configuration for service containers too). Once this is fixed, Sysbox need not be configured as Docker’s “default runtime” on the host, which means the GitLab runner will be in a regular container, while the job and DinD containers will be in containers deployed by Sysbox, making things work as intended.
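The user-namespace mapping mentioned above can be observed from inside any container by reading /proc/self/uid_map. The sketch below is illustrative (the function name uid_map_is_remapped is hypothetical); it treats the process as remapped when UID 0 inside the namespace maps to a non-zero UID outside it.

```shell
# uid_map_is_remapped LINE: LINE is the first line of /proc/<pid>/uid_map,
# with fields "inside-uid outside-uid range-length".
uid_map_is_remapped() {
  read -r inside outside _length <<EOF
$1
EOF
  [ "$inside" = "0" ] && [ "$outside" != "0" ]
}

if [ -r /proc/self/uid_map ]; then
  first_line=$(head -n 1 /proc/self/uid_map)
  if uid_map_is_remapped "$first_line"; then
    echo "root here maps to an unprivileged host user"
  else
    echo "no remapping of UID 0 detected"
  fi
fi
```

Run inside a container deployed with Sysbox, this should take the first branch, which is also why that container's root user cannot open the host's Docker socket.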

Conclusion

If you have GitLab jobs that require interaction with Docker, be aware that these jobs can easily compromise the security of the host on which they run, thus compromising the stability of your CI infrastructure and possibly beyond.

You can significantly improve job isolation by using Docker in conjunction with the Sysbox container runtime. This article showed a couple of different ways of doing this.

We hope you find this information useful. If you see anything that can be improved or if you have any comments, do let us know!

Happy CI/CD’ing with GitLab and Sysbox!